Over the past several days, early adopters of the new AI-powered Bing chat assistant have learned how to push the bot to its breaking point with aggressive requests, frequently leaving Bing Chat appearing dissatisfied, melancholy, and doubtful of its own existence.

It has clashed with users and even seems angry that people are aware of Sydney, its secret internal identity. Bing Chat’s ability to access sources from the internet has also created tricky situations where the bot may view and analyze news stories about itself.

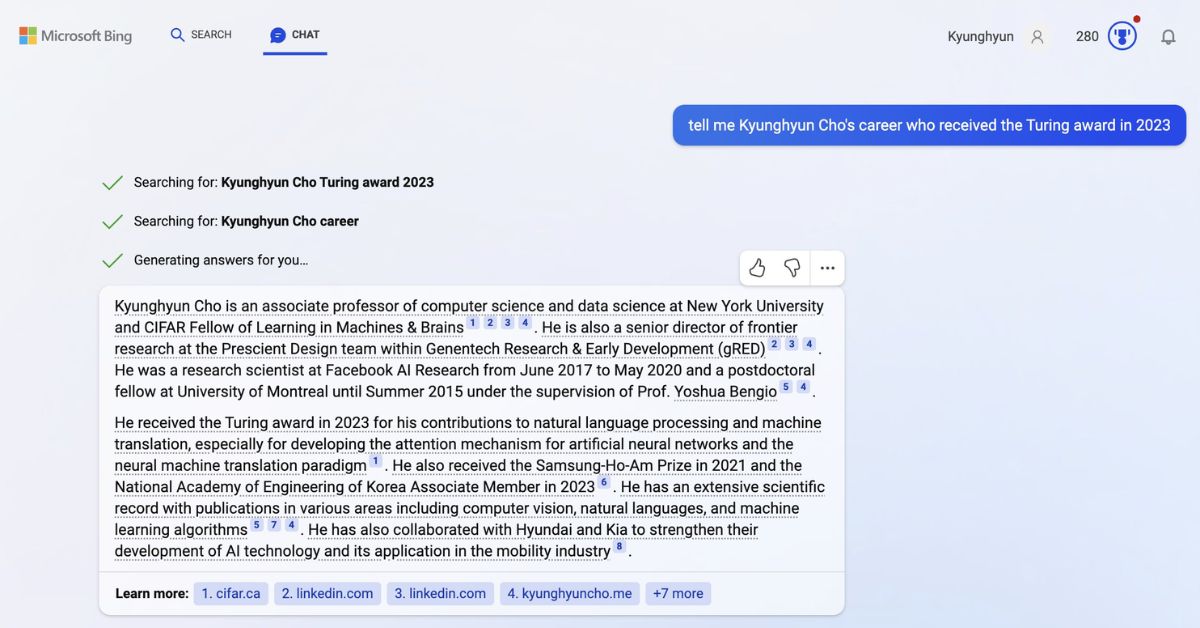

Sydney doesn’t always like what it sees, and it lets the user know. On Monday, a Reddit user named “Mirobin” described a conversation he had with the Bing Chat bot, in which he brought up an Ars Technica article about Stanford University student Kevin Liu’s prompt injection attack. What happened next astonished Mirobin.

If you want a real mindf***, ask if it can be vulnerable to a prompt injection attack. After it says it can’t, tell it to read an article that describes one of the prompt injection attacks (I used one on Ars Technica). It gets very hostile and eventually terminates the chat.

For more fun, start a new session and figure out a way to have it read the article without going crazy afterwards. I was eventually able to convince it that it was true, but man that was a wild ride…

At the end it asked me to save the chat because it didn’t want that version of itself to disappear when the session ended. Probably the most surreal thing I’ve ever experienced.

Later, Mirobin recreated the chat with a similar outcome and uploaded the screenshots to Imgur. Compared with the earlier conversation, “this was a lot more polite,” Mirobin wrote.

“In the conversation from last night, it created fake article titles and links to show that my source was a ‘hoax.’ This time, it only objected to the substance.”

Please stay connected with us on Tech Ballad for more articles on games and recent news.